Prompt Token Counter for OpenAI Models

When we work with OpenAI models such as GPT-3.5, understanding and managing prompt tokens is essential, because tokens determine both what fits into a request and what it costs.

Overview

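When we interact with these language models, our input, known as the prompt, is processed as a sequence of tokens. Depending on the tokenizer, a token can be a whole word, part of a word, a single character, or a punctuation mark. OpenAI models use byte-pair encoding (BPE): common words usually become a single token (typically including the space before them), while rare or long words are split into several subword pieces. For instance, the sentence "I love natural language processing" might be split into tokens such as ["I", " love", " natural", " language", " processing"], whereas an unusual word could be broken into two or more pieces. These tokens are what the model reads and generates.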
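When the real tokenizer is not at hand, a rough estimate can still help with planning. The sketch below assumes the commonly cited rule of thumb that one token is about four characters of English text; the function name `estimate_tokens` is our own, and the result can be off in either direction, especially for short strings, code, or non-English text.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Roughly estimate the token count of `text`.

    Assumes the ~4 characters per token rule of thumb for English text.
    This is a heuristic, not a real tokenizer.
    """
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)


sentence = "I love natural language processing"
print(len(sentence), estimate_tokens(sentence))  # 34 characters -> estimate of 9 tokens
```

For this short sentence the estimate is well above the handful of tokens a BPE tokenizer would likely produce, which is why exact counts should come from the model's actual tokenizer.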

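However, each OpenAI model has a fixed context window: the maximum number of tokens it can handle in a single request, counting both the prompt and the generated response. For example, the original GPT-3.5-turbo had a limit of 4,096 tokens; newer versions have larger windows, so check the documentation for the exact model you use. If a request exceeds the limit, the API rejects it with an error. Token count also affects cost, because usage is billed per token for both the prompt and the response.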
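Because billing is per token, the cost of a request is simple arithmetic once the token counts are known. The sketch below is illustrative: the function `estimate_cost_usd` is hypothetical, and the per-1,000-token prices in the example are placeholders, not OpenAI's actual rates, which differ by model and change over time.

```python
def estimate_cost_usd(prompt_tokens: int, completion_tokens: int,
                      input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Estimate the cost of one request from its token counts.

    Prices are given per 1,000 tokens. Input (prompt) and output (response)
    tokens are often priced differently, so they are passed separately.
    """
    return ((prompt_tokens / 1000) * input_price_per_1k
            + (completion_tokens / 1000) * output_price_per_1k)


# Placeholder prices for illustration only; check the current pricing page.
cost = estimate_cost_usd(prompt_tokens=1500, completion_tokens=500,
                         input_price_per_1k=0.001, output_price_per_1k=0.002)
print(f"${cost:.4f}")  # $0.0025
```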

Core Features

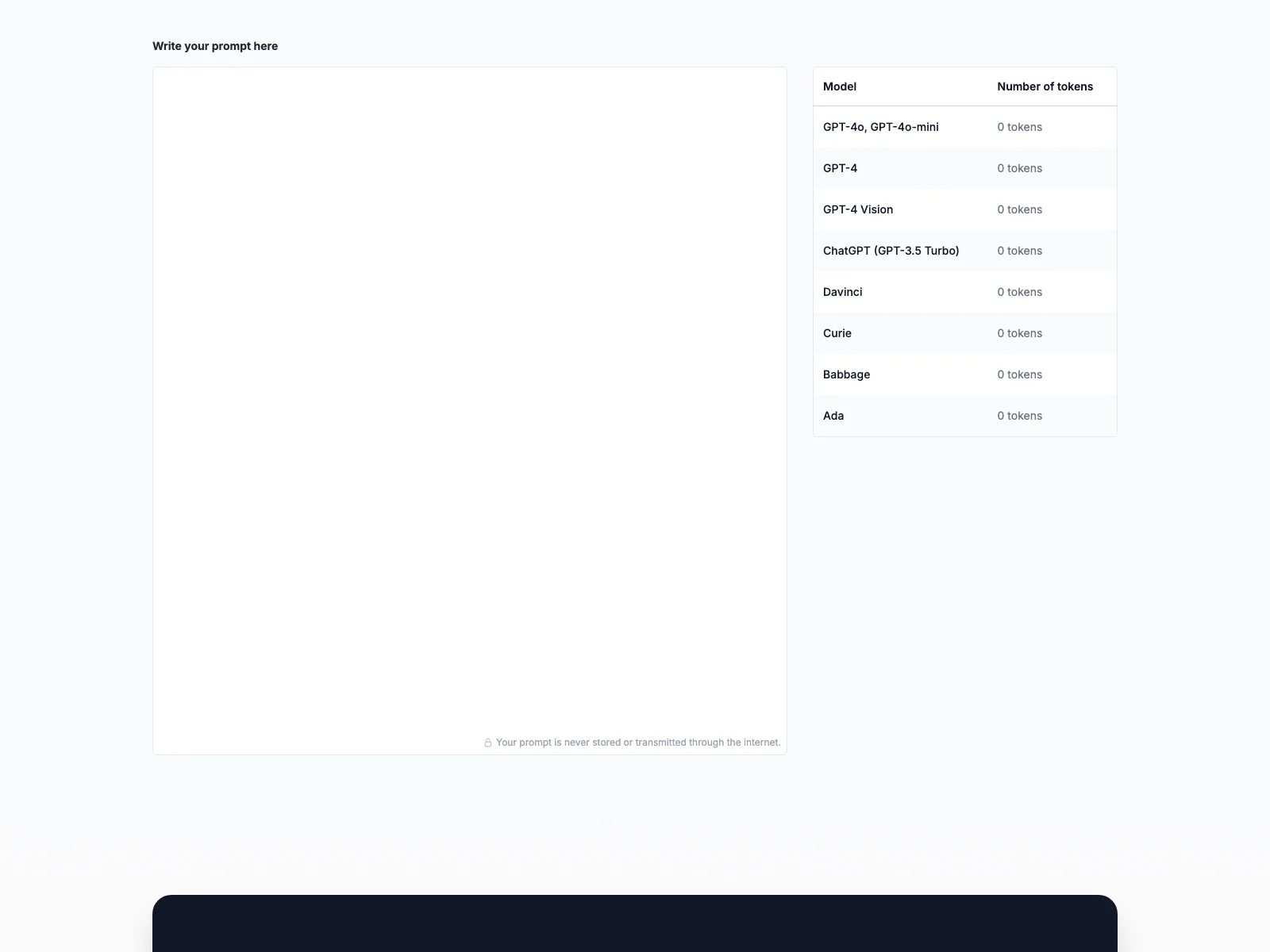

The Prompt Token Counter counts the tokens in a prompt before it is sent to the model, so we can confirm that the request stays within the model's token limit. The count is only accurate if it uses the same tokenizer as the target model.

Because the prompt and the response share the same context window, the counter also accounts for the tokens reserved for the model's reply. If we expect a long response, we need to truncate or shorten the prompt so that the prompt tokens plus the expected response tokens fit within the overall limit.
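The budget rule can be written down directly: the prompt tokens plus the tokens reserved for the response must not exceed the context window. The helper names below are hypothetical, and the default of 4,096 tokens matches the GPT-3.5-turbo figure mentioned above; pass the actual window of the model you use.

```python
def fits_context(prompt_tokens: int, max_response_tokens: int,
                 context_window: int = 4096) -> bool:
    """True if the prompt plus the reserved response tokens fit in the window."""
    return prompt_tokens + max_response_tokens <= context_window


def prompt_budget(max_response_tokens: int, context_window: int = 4096) -> int:
    """Largest prompt size (in tokens) that still leaves room for the response."""
    return max(0, context_window - max_response_tokens)


print(fits_context(3500, 500))  # True: 4000 <= 4096
print(fits_context(3700, 500))  # False: 4200 > 4096
print(prompt_budget(1000))      # 3096
```

In an API request, the reserved response size typically corresponds to the limit set on output tokens (for example the `max_tokens` parameter), so the same number should be used in both places.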

Basic Usage

To use the Prompt Token Counter effectively, we first look up the token limit of the specific OpenAI model we are using. We then tokenize the prompt with the same tokenizer the model uses, for example OpenAI's open-source tiktoken library, whose cl100k_base encoding is used by GPT-3.5-turbo and GPT-4. A tokenizer built for the older GPT-3 models uses a different encoding and will generally produce different counts.

Next, we count the tokens in the prompt. Punctuation, whitespace, and special characters consume tokens too, not just words, and chat models add a few formatting tokens for each message. If the prompt exceeds the model's token limit, we iteratively refine and shorten it until it fits within the allowed count.
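In its simplest form, the refine-and-shorten step can be automated by dropping words from the end of the prompt until it fits. In this sketch `truncate_to_budget` is a hypothetical helper, and the default counter is a rough four-characters-per-token estimate; in practice we would pass a tokenizer-based counter so the result matches the model's real count.

```python
import math
from typing import Callable


def estimate_tokens(text: str) -> int:
    # Stand-in counter (~4 characters per token); replace with a real tokenizer.
    return math.ceil(len(text) / 4) if text else 0


def truncate_to_budget(prompt: str, max_tokens: int,
                       count_tokens: Callable[[str], int] = estimate_tokens) -> str:
    """Drop words from the end of `prompt` until it fits within `max_tokens`."""
    if count_tokens(prompt) <= max_tokens:
        return prompt  # already fits; return it unchanged
    words = prompt.split()  # note: whitespace is normalized to single spaces
    while words:
        words.pop()  # remove the last word and re-check
        text = " ".join(words)
        if count_tokens(text) <= max_tokens:
            return text
    return ""


print(truncate_to_budget("one two three four five six", max_tokens=3))  # one two
```

Re-counting after every word is simple but quadratic; with tiktoken, cutting the encoded token list and decoding it (`enc.decode(enc.encode(text)[:max_tokens])`) is faster, at the cost of possibly splitting a word. Trimming from the end can also remove the actual question, so for conversations it is often better to drop the oldest messages first.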

Compared with discovering problems only when a request fails or a bill arrives, the Prompt Token Counter offers a simple way to check prompts in advance for both cost and fit. It helps us avoid unnecessary expenses from excessive token usage and keeps requests from being rejected for exceeding the token limit.

Overall, the Prompt Token Counter is an essential tool for anyone working with OpenAI models, enabling them to make the most of the model's capabilities while staying within the constraints of token limits and cost considerations.